Hadoop

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models.

The following topics discuss how to work with Hadoop implementations:

General information about Hadoop can be found at the official website.

About Hadoop

Logi Analytics has strategic partnerships with the industry's Big Data technology leaders for analytical and Hadoop data stores: HP Vertica, Amazon Redshift, ParStream, Hortonworks, and Cloudera.

Hadoop is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, delivering a highly available service on top of a cluster of computers.

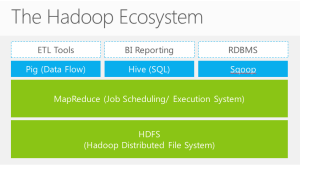

Logi Info accesses data in real time through an ODBC connector for Hive, a Hadoop component that facilitates querying and managing large datasets residing in distributed storage. Hive projects structure onto this data and lets you query it using a SQL-like language called HiveQL. HiveQL also allows traditional map/reduce programmers to plug in custom mappers and reducers when it is inconvenient or inefficient to express this logic in HiveQL itself.
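To make the idea of custom mappers and reducers concrete, here is a minimal sketch in Python of the map/reduce pattern, written in the streaming style where rows arrive as lines of text and results leave as tab-separated lines. The word-count task and the names `map_line`, `reduce_pairs`, and `run_job` are assumptions chosen for illustration; they are not part of Hive, Hadoop, or Logi Info.

```python
from itertools import groupby


def map_line(line):
    """Mapper (hypothetical): emit a (word, 1) pair for each word in one input row."""
    for word in line.strip().split():
        yield word.lower(), 1


def reduce_pairs(sorted_pairs):
    """Reducer (hypothetical): sum the counts for each key.

    The pairs must already be sorted by key, which mirrors the
    shuffle/sort step that sits between the map and reduce phases.
    """
    for key, group in groupby(sorted_pairs, key=lambda pair: pair[0]):
        yield key, sum(count for _, count in group)


def run_job(lines):
    """Run map, sort, and reduce in-process and return tab-separated output lines."""
    mapped = sorted(pair for line in lines for pair in map_line(line))
    return [f"{word}\t{total}" for word, total in reduce_pairs(mapped)]
```

In a real deployment the mapper and reducer would typically be separate scripts that read from standard input and write to standard output; HiveQL's `TRANSFORM ... USING` clause is one way to invoke such scripts from a query. The in-process `run_job` helper here only simulates that flow so the logic can be checked locally.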

Logi Studio's SQL Query Builder tool works well with HiveQL and helps you build managed reports quickly and efficiently.

This topic presents techniques for connecting Logi Info applications to Hadoop implementations, such as Cloudera CDH4 and Hortonworks, and discusses the details of setting up Cloudera Kerberos authentication.